Honest Review of OpenAI DevDay

We lived to tell the story

6 Nov marked OpenAI’s first conference: OpenAI DevDay. It was the biggest event in AI.

I was there and lived to tell the story!

Sounds dramatic but many startups died that day, and we were not one of them.

Here’s my honest review of what was announced and where OpenAI is trying to position itself.

Here’s what we’ll cover

What was announced at OpenAI DevDay

Several mini-announcements with major impact

OpenAI + Microsoft

GPTs

Assistants API

Evening reception at the de Young Museum.

After party with Grimes

Summary

Who was at OpenAI DevDay

The name itself, ‘DevDay’, signaled that the event was aimed at developers.

I could count ~1000 people at the event and most were not developers at all.

Based on the sample of the people I met, I’d guess the distribution was the following:

1/3 OpenAI team or companies closely affiliated with OpenAI (e.g. ChatGPT Enterprise distribution partners, BCG consultants, freelancers doing stuff for OpenAI)

1/3 students or recent graduates. I’m unsure why there were so many of them but I can imagine this was a recruitment technique

1/3 miscellaneous. I was lucky to be among those invited to OpenAI DevDay and I think I fall in the misc group. Among the rest of the people (like me) I could not see a common thread – they were anything from educators, through researchers, through entrepreneurs – a mixed bag. The main thing we had in common was that we were not in the first 2 groups.

To gossip a bit - among the people I saw that day were: Sam Altman (CEO of OpenAI), Satya Nadella (CEO of Microsoft), Greg Brockman (President, Chairman, & Co-founder of OpenAI), Grimes (world-famous for being mother of Elon Musk’s children but highly appreciated in Silicon Valley for all her other talents) and many others famous in the AI community.

The little fanboy in me was very excited!

The venue was probably the most convenient conference venue I’ve been to – it was spread over several levels, and one of them (where the food was) was completely outdoors (OpenAIR they called it). Kudos!

Schedule

08:30 AM – Doors open & breakfast is available

10:00 AM – Keynote begins

11:00 AM – Lunch & demo lounge opens

11:30 AM – 12:15 PM New Products Deepdive

11:30 AM – Breakout sessions begin

12:30 PM – 1:15 PM Maximizing LLM Performance

01:30 PM – 2:15 PM The Business of AI

02:30 PM – 3:15 PM AI Frontiers

03:30 PM – Content ends

05:00 PM – Evening reception at the de Young Museum

We went in around 8.30AM, had breakfast and networked until 10AM.

At 10AM Sam Altman held the keynote.

Right after the keynote… most of the people disappeared. There were 2 stages with events going on all the time but those were mostly empty.

It was such a Bay Area experience – people came for the keynote and went to work, I guess? Who does full day events on a Monday anyways…

There were also the ‘Breakout sessions’, where OpenAI was getting ideas from the community on what to do next. Many of the important meetings happened in these breakout sessions behind closed doors.

This involved mainly the first 1/3 of the people at the event.

What was announced at OpenAI DevDay

By now a lot of this info has reached you but in this analysis, I’ll not only present the information but also what it means for OpenAI, Team-GPT (and companies like us), and the whole ecosystem.

These impressions are based on 150+ conversations I had between 3 Nov and 9 Nov in the Bay Area.

The success of ChatGPT

The day started with a celebration of ChatGPT numbers: 2M developers, 92% of Fortune 500 companies, 100M weekly active users.

2M Developers. What does ‘developer’ even mean? It means you are using an API key to do something with OpenAI. It could be to build an app, integrate LLMs into your existing product or simply chat through the API. Chances are you (dear reader) are also a developer according to this measure.

92% of Fortune 500. This number sounded like a playbook from Sam Altman’s YC past - a rather ambiguous number, with not much context which sounds very impressive.

100M weekly active users was the highlight for me. First, because I do believe ‘weekly active users’ is the right measure for ChatGPT usage (at Team-GPT we use the same). The reason is that we are not quite at the point where people use ChatGPT every day. Judging by our own users, 2-3 times per week is the norm. Some tasks just can’t be ChatGPT-ed yet or we haven’t found all the use cases. But soon GPT usage will become daily – similar to how people adopted Google. First for specific use cases, now Google is literally ‘the front page of the Internet’.

On the topic of users, I want to elaborate a bit more.

100M sounds like a lot but it isn’t. Nothing was said about GPT-4 usage vs GPT-3 usage during the conference but crosschecking with other sources, only 2M of those are using GPT-4 (which is the real deal).

GPT-4 = paid users who are getting the actual value out of the product.

2M weekly active GPT-4 users out of 2B people who will need to use ChatGPT for their work in the next couple of years.

2M / 2B means we’ve got about 0.1% GPT-4 adoption.

99.9% of the population is not using GPT-4.

Even if we count the 100M that Sam Altman quoted, humanity is at <5% adoption (if we assume that these 100M have really ‘adopted’ ChatGPT).
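The arithmetic behind these percentages is easy to check. Here is a minimal sketch using only the figures quoted above; the 2B denominator is my own estimate (not an OpenAI number), and the ~8B world population is a rounded assumption added for the "humanity" comparison.

```python
# Figures quoted in the text. The 2B "people who will need ChatGPT for work"
# is the author's estimate; 8B is a rounded world population (assumption).
GPT4_WEEKLY_USERS = 2_000_000
CHATGPT_WEEKLY_USERS = 100_000_000
FUTURE_WORK_USERS = 2_000_000_000
WORLD_POPULATION = 8_000_000_000


def adoption_pct(users: int, population: int) -> float:
    """Share of the population that uses the product, in percent."""
    return 100 * users / population


gpt4_pct = adoption_pct(GPT4_WEEKLY_USERS, FUTURE_WORK_USERS)
chatgpt_pct = adoption_pct(CHATGPT_WEEKLY_USERS, FUTURE_WORK_USERS)
humanity_pct = adoption_pct(CHATGPT_WEEKLY_USERS, WORLD_POPULATION)

print(f"GPT-4 vs. future work users:   {gpt4_pct:.2f}%")
print(f"ChatGPT vs. future work users: {chatgpt_pct:.2f}%")
print(f"ChatGPT vs. humanity:          {humanity_pct:.2f}%")
```

Change the denominators to your own estimates and the gap stays enormous either way.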

Yet not a single word was said to address this growing gap between those who have adopted and those who haven’t.

GPT-4 usage gap means we’ve got AI-enabled humans, competing with simple humans.

We talk about the ‘gender pay gap’, ‘race pay gap’, etc. Those would be NOTHING compared to the AI pay gap. People using AI can and will make 3x, 5x, 100x more than the others.

And at the OpenAI conference and in San Francisco as a whole, this whole ‘AI-adoption gap’ was rather funny as a concept – another way in which people in tech are ‘superior’ to everyone else.

If you are a part of the ‘everyone else’ group, don’t be antagonized by this statement. Instead, start using GPT-4 immediately and catch up. I’ve created a FREE course: ’ChatGPT for Work: The Interactive Course’ to help you with this.

Actually, the only time we addressed this was in the Uber going from one venue to another. Over there, a kind Mexican lady suggested we share the Uber – something I had never seen in America before! Either way, she understood it because she was not working in the tech industry, she was working with the tech industry.

Several mini-announcements with major impact

Most other articles on the topic start here. I’m sure many of them will be more detailed but I’ll try to add my perspective on the impact of each of these.

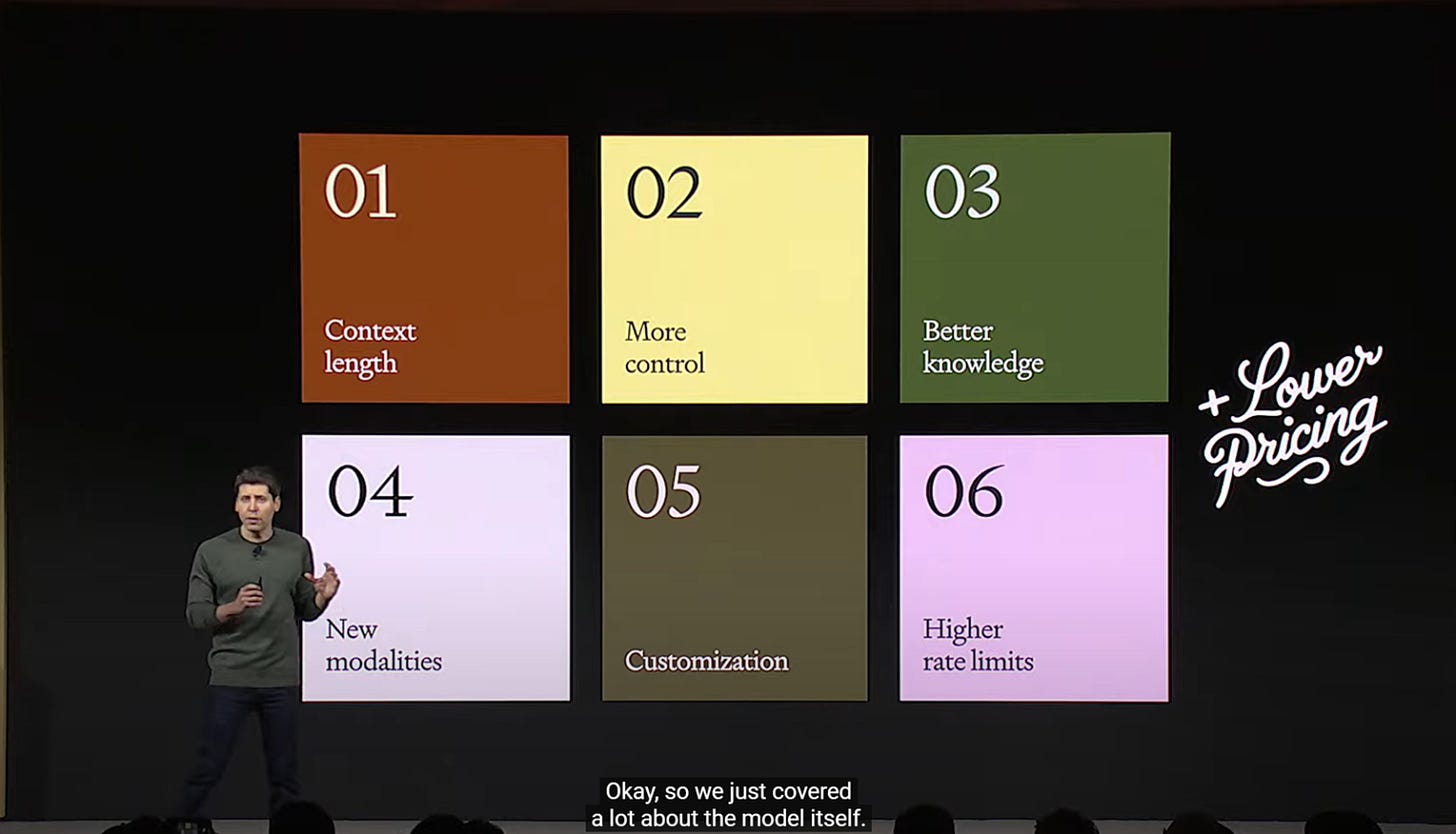

1. GPT-4 Turbo and 128K Context Length

Woah! This was huge!

As Sam Altman put it – you can now feed 300 pages at once as context.

The main GPT-4 competitor is Anthropic (with its model Claude). Claude had one big advantage over ChatGPT: it could take up to 100K tokens as context (~75K words), while GPT-4 was limited to 8K tokens (~6K words).

With this move, OpenAI literally annihilated Claude’s only advantage.

By the way, Amazon recently invested $4B in Anthropic.

This announcement must have hurt both Anthropic and Amazon, so I’m curious to see how they respond.

2. More control through the API

Function calling is now more advanced.

You, dear reader, are probably not that technical (as my audience normally isn’t), so I’ll just scratch the surface of this one.

OpenAI now lets companies like ours build plugins and agents, set a seed (for reproducible outputs), and more.

This was one of the biggest hurdles for developers (like us) and these updates will allow us to build better products.

Thanks, OpenAI - with the caveat: all of this must happen within OpenAI’s ecosystem.

Not that sexy but we at Team-GPT are happy with this development.

3. Knowledge cut-off is now April 2023, not September 2021

The knowledge cut-off is pretty annoying, right? But as Sam Altman put it: it is even more annoying to OpenAI.

When ChatGPT came out in Nov 2022, they were more than 1 year behind (data until Sep 2021).

During DevDay in Nov 2023, they updated the cut-off to April 2023, so they are now about 7 months behind.

OpenAI is narrowing the gap, but LLMs (GPT models) do NOT have contemporary knowledge by design. This is also why many people don’t want to use them or don’t trust them. This is also the main advantage of Google Bard.

OpenAI has a fundamental problem with this and I’m curious to see how they go about it.

Sam couldn’t fool us on this one!

4. New modality

GPT-4 Turbo with vision, DALL-E 3, new text to speech all available through the API. This is generally great news for developers. Now we can do much more with the API and are happy to experiment!

DALL-E 3 is now the best image generation API out there and on the same day, Coca-Cola launched a campaign produced with the new DALL-E 3.

This was a real flex!

But the really big one for me was text to speech.

Check the Text to Speech of OpenAI over here.

It’s quite something – a text to speech which I would call almost perfect.

Whisper V3, the new speech recognition (speech-to-text) model, will soon be available through the API as well.

5. GPT-4 fine tuning + Custom models

This is where it becomes interesting.

Sam mentioned that GPT-4 fine tuning will be available – and they really had a lot of pressure to do so. Based on my experience, I know very few people who have had success with fine-tuning. Moreover, fine-tuning GPT-3 is rather pointless, since even the best GPT-3 fine-tuned model is still worse than the generic GPT-4 (with very, very edge case exceptions). I have yet to see good implementations of this.

Custom models, however, are something else.

Custom models apparently mean you can hire OpenAI to train a custom model for your company. This sounds very exciting, until you realize ChatGPT still doesn’t offer a team plan, and it takes weeks, if it happens at all, to even schedule a ChatGPT Enterprise demo. But now they will be doing something which is very human-resource intensive (essentially consulting). This is when I laughed a bit because it could be translated as: OpenAI wants all GPT-related business and doesn’t care that they can’t handle it.

Custom models sound like the last thing they want to do if they want to reach AGI. Unless, of course, they train on the data of the clients for whom they are building the custom models. This is the only way in which I see them aligned with working on this.

This reminded me of another meeting.

I remember meeting with the Head of Growth of Hugging Face and asking him how we can work together. We briefly discussed the possibility of consulting and Custom models and he told me: we are doing this – we don’t need anyone to help us. FYI, he replied like this to almost everyone else who asked for partnerships: ‘we are doing this’.

In a similar vein, OpenAI tried to say the same thing during OpenAI DevDay: ‘We’ve got this’.

Although they don’t.

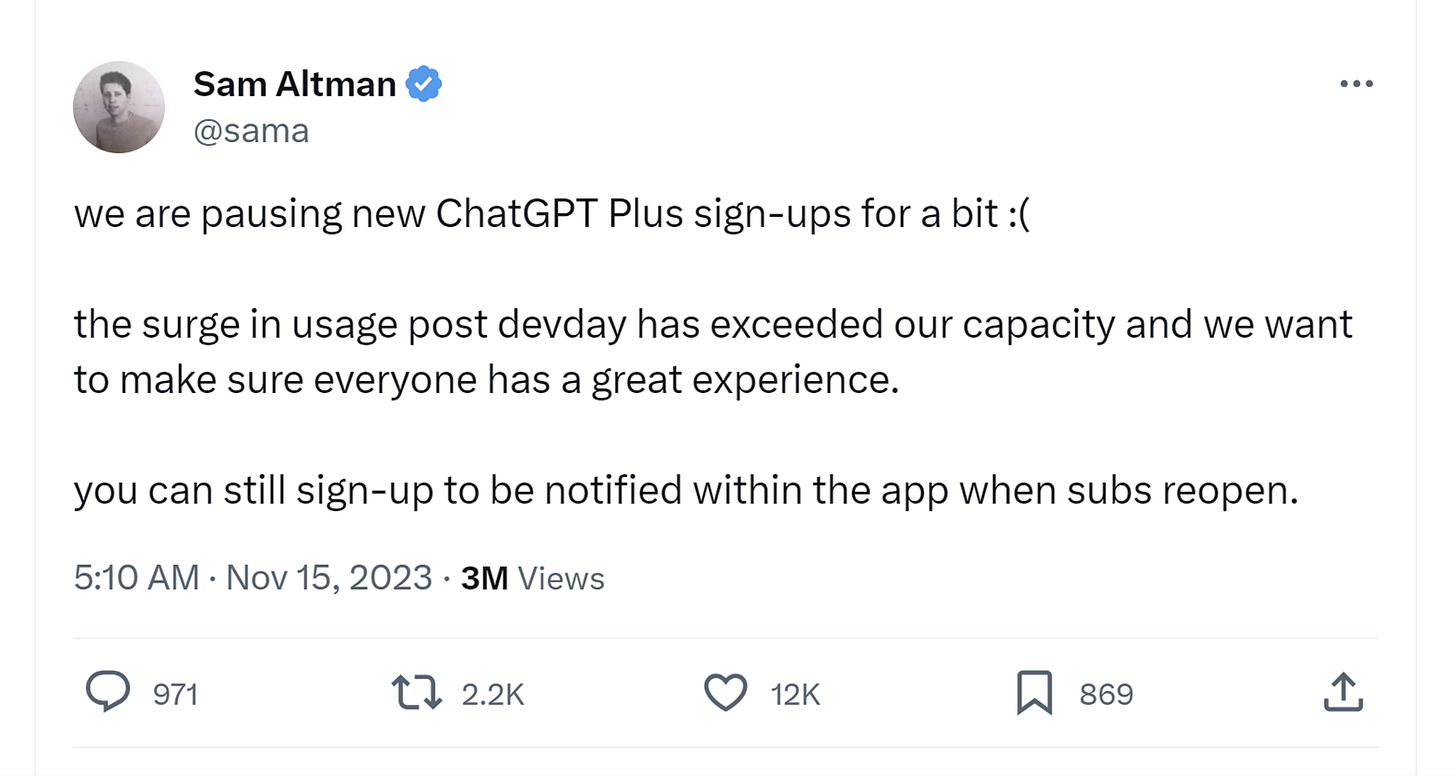

1 year later, they still can’t handle ChatGPT Plus sign-ups.

But they will be offering literally every service ever.

Interestingly, there were many ‘developers’, aka attendees/invitees to the conference who were very much in the business of Custom models. OpenAI is eating them alive, while not being able to provide the desired service for even the basic product to its users.

That’s what infuriated everyone, and we will revisit this again.

6. Higher rate limits + Copyright Shield

Ah, hallelujah!

This is literally what we want, OpenAI. All the heavy users are complaining about rate limits - everywhere, all around the place. This is the first thing I hear when I talk to clients!

They’ve doubled the token rate limits (for existing customers) and created a procedure to request an increase to your current rate limits (available here).

Here’s the quick overview.

Then again, 2x is nothing. If I’m hitting the limits every minute, then by doubling the tokens, I’ll be probably hitting them even with this 2x increase. This is not allowing devs to scale operations well.

We need 10x to 100x increase in the rate limits and that’s where Microsoft will likely jump in.

Again, all of OpenAI’s resources seem to be aimed at increasing the scope of their activities, rather than the scale.

Finally, the Copyright Shield… As Sam put it: ‘We will step in and defend our customers and pay the costs incurred, if you face legal claims or copyright infringement’.

This is just about everything he said, and we are yet to understand what this means.

Sounds like: OpenAI’s lawyers are my lawyers now – free of charge…

Interested to see how this scales - it’s either no one will need the Copyright Shield, or everyone will need it and the system will collapse.

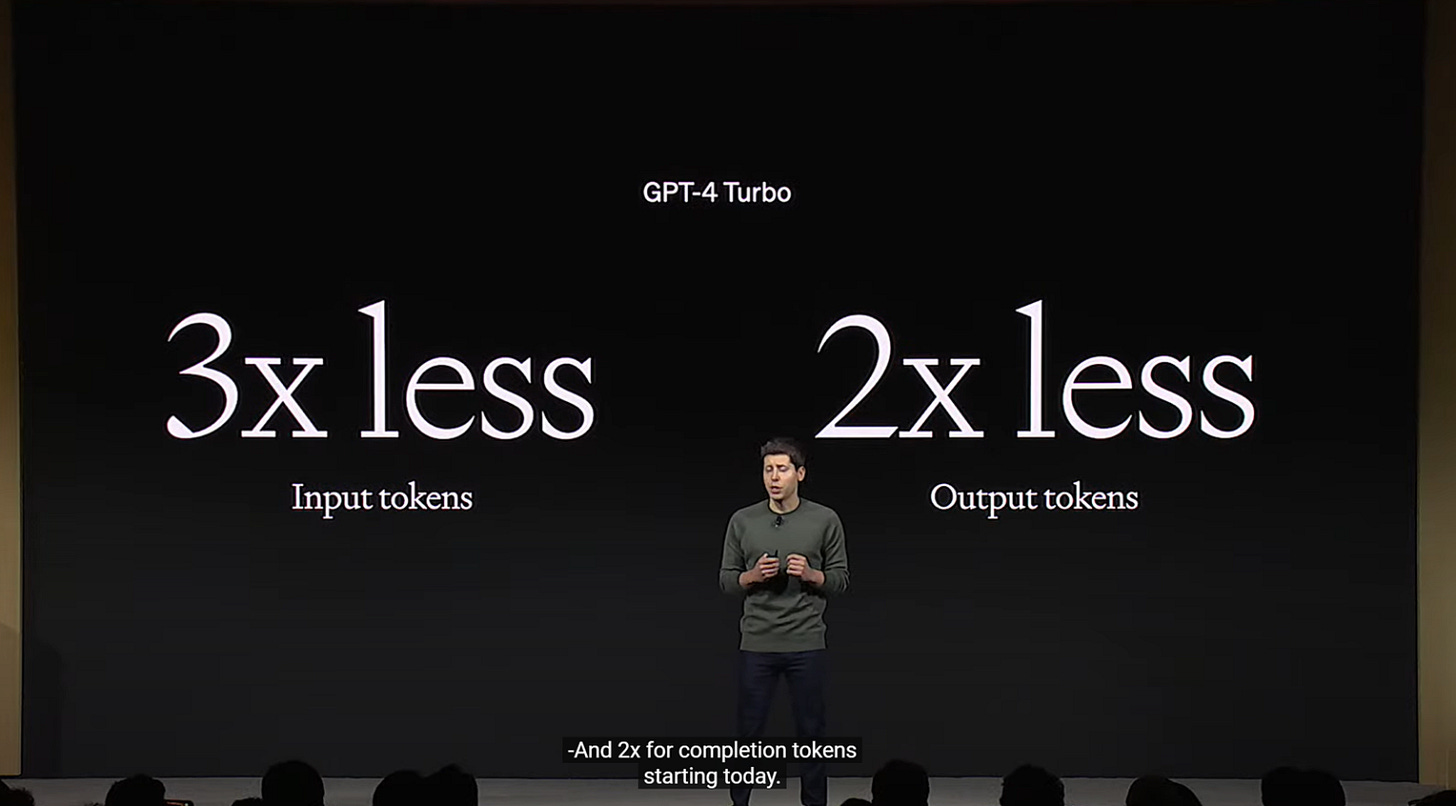

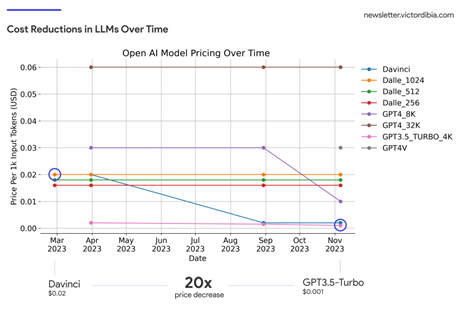

GPT-4 Turbo Pricing

On to the good news – a major price decrease!

The newest model is not only the best one ever but also cheaper than the existing one.

When GPT-4 came out, it was 20x more expensive than GPT-3.

As GPT-4 Turbo is coming out, it is ~2.75x less expensive than GPT-4.

Everyone wanted cheaper models and OpenAI is providing.

But this sounds strange, doesn’t it?

OpenAI is currently leading a natural monopoly. Everyone knows GPT-4 (Turbo) is the best model. I’ve been preaching to everyone everywhere – do not use anything less than GPT-4, it’s not worth your time.

So why are they slashing the price?

I’ve investigated this at length in Silicon Valley. I asked many people about their point of view and 2 major opinions formed:

LLM costs will be going down in the future and this is a part of the trend.

Defensibility - they are trying to lure more developers ASAP and maybe raise prices later

Both of these make sense and are probably true at the same time.

With the higher scale of operations + the partnership with Microsoft, OpenAI is probably able to slash costs 10x to 100x more, while allowing us to enjoy only 2.75x of it.

This is great news, and we are grateful for the gesture – they could have just continued extorting developers, being the natural monopolist they are.

But I want to touch on the second point – defensibility.

OpenAI has not only increased the context limit to 128K (above that of Anthropic), they are now also slashing the prices. This is making it even harder for others to compete. OpenAI is trying to destroy the competition on every level they can think of.

Given the lower prices, we are all thinking about integrating even further with OpenAI. But nothing is stopping them from changing their mind and increasing the prices 5x in the future. We have seen this in almost every industry ever, most notably cloud, where it is very cheap to get started but then hits very hard as you scale up.

Or simply doing something else…

For instance, pushing 128K tokens through the API at the ‘new lower prices’ amounts to about $1.28/request. And this is the beauty and brutality of the combination of the new features – they allow you to pass 16 times more tokens but charge per token. They give you more, so they can then take more.
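To see where the $1.28 comes from, here is a minimal sketch of the calculation. It assumes an input price of $0.01 per 1K tokens (the rate implied by the figure above), treats 8K and 128K as round numbers, and ignores output tokens, which are billed separately.

```python
# Assumption: $0.01 per 1K input tokens, the rate implied by the $1.28 figure.
PRICE_PER_1K_INPUT_TOKENS = 0.01  # USD

OLD_CONTEXT_TOKENS = 8_000     # GPT-4 context, rounded as in the article
NEW_CONTEXT_TOKENS = 128_000   # GPT-4 Turbo context, rounded as in the article


def input_cost(tokens: int, price_per_1k: float = PRICE_PER_1K_INPUT_TOKENS) -> float:
    """Cost in USD of sending `tokens` input tokens in one request."""
    return tokens / 1000 * price_per_1k


full_context_cost = input_cost(NEW_CONTEXT_TOKENS)
context_growth = NEW_CONTEXT_TOKENS / OLD_CONTEXT_TOKENS

print(f"One full-context request: ${full_context_cost:.2f}")
print(f"Context window growth: {context_growth:.0f}x")
```

The per-token price went down, but the maximum bill per request went up, because the bill scales with how much context you choose to send.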

One of the first pressure tests which came out on Nov 9 was by Greg Kamradt who found out the 128K tokens model is not that great but cost him $200 to prove it. Here’s the summary:

No Guarantees - Your facts are not guaranteed to be retrieved. Don’t bake the assumption they will into your applications

Less context = more accuracy - This is well known, but when possible reduce the amount of context you send to GPT-4 to increase its ability to recall

Position matters - Also well known, but facts placed at the very beginning and 2nd half of the document seem to be recalled better. Facts placed between 7%-50% document depth are not recalled well.
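For the curious, a test like this boils down to hiding one fact at a chosen depth inside a long document and then asking the model about it. Below is a minimal sketch of just the document-building step. It is not Greg's actual code, and the function name, filler text and fact are made up for illustration.

```python
def insert_at_depth(filler: str, fact: str, depth_pct: float) -> str:
    """Place `fact` roughly `depth_pct` percent of the way through `filler`."""
    if not 0 <= depth_pct <= 100:
        raise ValueError("depth_pct must be between 0 and 100")
    words = filler.split()
    position = round(len(words) * depth_pct / 100)
    return " ".join(words[:position] + [fact] + words[position:])


# Stand-in for a long document (hypothetical filler, 100 words).
filler = " ".join(f"word{i}" for i in range(100))
# Hypothetical fact the model would later be asked to recall.
fact = "The secret code is 4217."

doc_start = insert_at_depth(filler, fact, 0)    # fact at the very beginning
doc_quarter = insert_at_depth(filler, fact, 25)  # fact in the weak 7%-50% zone
doc_end = insert_at_depth(filler, fact, 100)    # fact at the very end
```

Each generated document would then be sent to the model together with a question about the fact, and you record whether the answer comes back correct. With 128K tokens per request, it is easy to see how the bill adds up.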

Other than that, we can still use the old models in the same way we did.

All models are getting about the same price reduction as the one mentioned above. Same applies for fine-tuning GPT-3.5 Turbo.

And that’s only 15 mins into the conference.

A lot more happened later on, and we will go through it all.

I must say, the density of new information at this keynote was out of this world!

OpenAI + Microsoft

About a year ago, Microsoft invested $10B in OpenAI for a 49% stake.

Several months later, we saw Microsoft roll out a bunch of AI-enabled features, most interestingly Copilot, an AI that is supposed to help you with everything (a story for another time).

OpenAI needed a partner – one with a lot of infrastructure. And while Microsoft had a lot of it, it announced it is setting up another $3.2B worth of servers in Australia alone.

This is important because they bought half of OpenAI for $10B. And now they’ll probably invest way more than that to make ‘ChatGPT happen’ because computing is expensive.

That said, Sam and Satya looked like best friends forever (a bit awkward but that’s our usual Sam)!

I must say – that was cool! Having Sam and Satya in one place was really powerful.

Satya was at the keynote for exactly 4 minutes, said a bunch of stuff that sounded ChatGPT generated, Sam dubbed it the ‘most exciting partnership in tech’ and Satya left.

A bit underwhelming in retrospect but REALLY COOL in the heat of the moment.

It was a strong PR stunt and OpenAI are really amazing at PR and marketing.

As a marketer - hats off for this move!

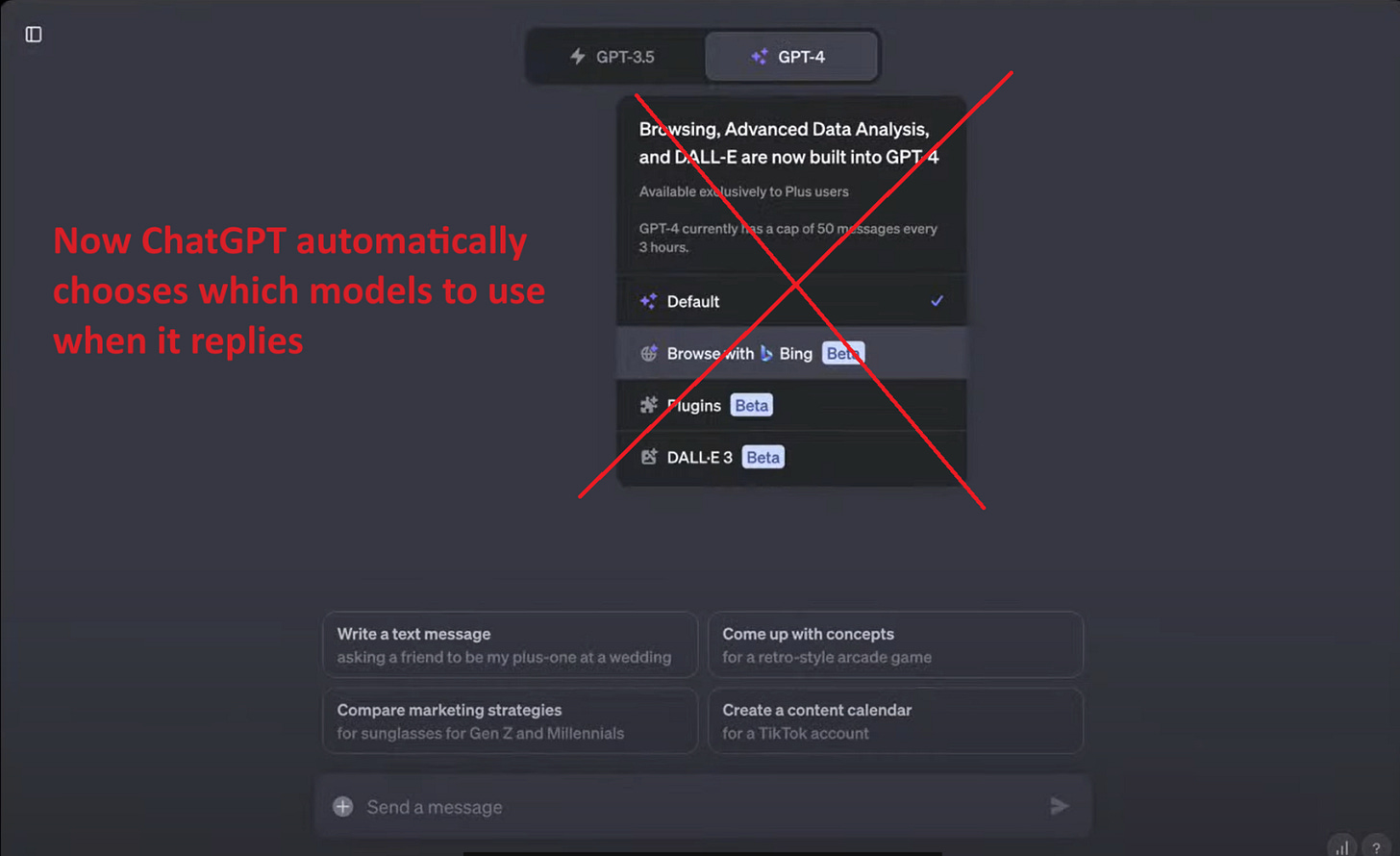

ChatGPT just became ‘dumber’ or why the model picker is gone

Sam began this with ‘we heard your feedback’ about how annoying it is to choose the model every time.

This means that now, whether you are browsing the internet, creating images or using plugins – it is all automatic.

Was it really that annoying? Who was it annoying for?

With the current updates, ChatGPT is now browsing the Internet when I don’t want it to. Creates images when I don’t want it to. Invokes plugins out of nowhere.

Overall, I think it became dumber. But the problem is not that it is dumber.

The problem is: my time from prompt to outcome is MUCH SLOWER.

People use ChatGPT to save time, not to waste time.

And that’s what they’ve achieved with this new feature.

Was this the customer feedback? Definitely not mine.

I remember telling 3 different OpenAI employees how their automatic choice of model is pretty bad. I’ve been providing this feedback since May 23, when I had a long discussion about Plugins.

Now this is my feedback to OpenAI: ChatGPT became much dumber with this product decision. I’m sure it will get better but right now – it is pretty bad.

I have certain information that they’ve put a lot of resources into getting this right and are obsessed with doing so. Yet I still think it is pointless.

I’ll let it slide for now because of an awesome new feature, which I think they got right.

GPTs

Another genius marketing move. Bravi!

What do customers want?

Every customer says the same thing: ‘I want to train my own ChatGPT’.

They took this customer request and introduced GPTs. As they described them: ‘GPTs are a new way for anyone to create a tailored version of ChatGPT to be more helpful… ‘

Here’s an example with an actual GPT that OpenAI launched: The Negotiator.

While I cannot know how exactly they prepared ‘The Negotiator’, my best guess is that it has some custom instructions to be very good at negotiation. Maybe they provided a couple of PDFs with negotiation tactics (but I doubt it – I think core GPT-4 is good enough).

Important: This is not a new model. It is the exact same model but with some differences to its capabilities and instructions. No fine-tuning was made. No new model was created.

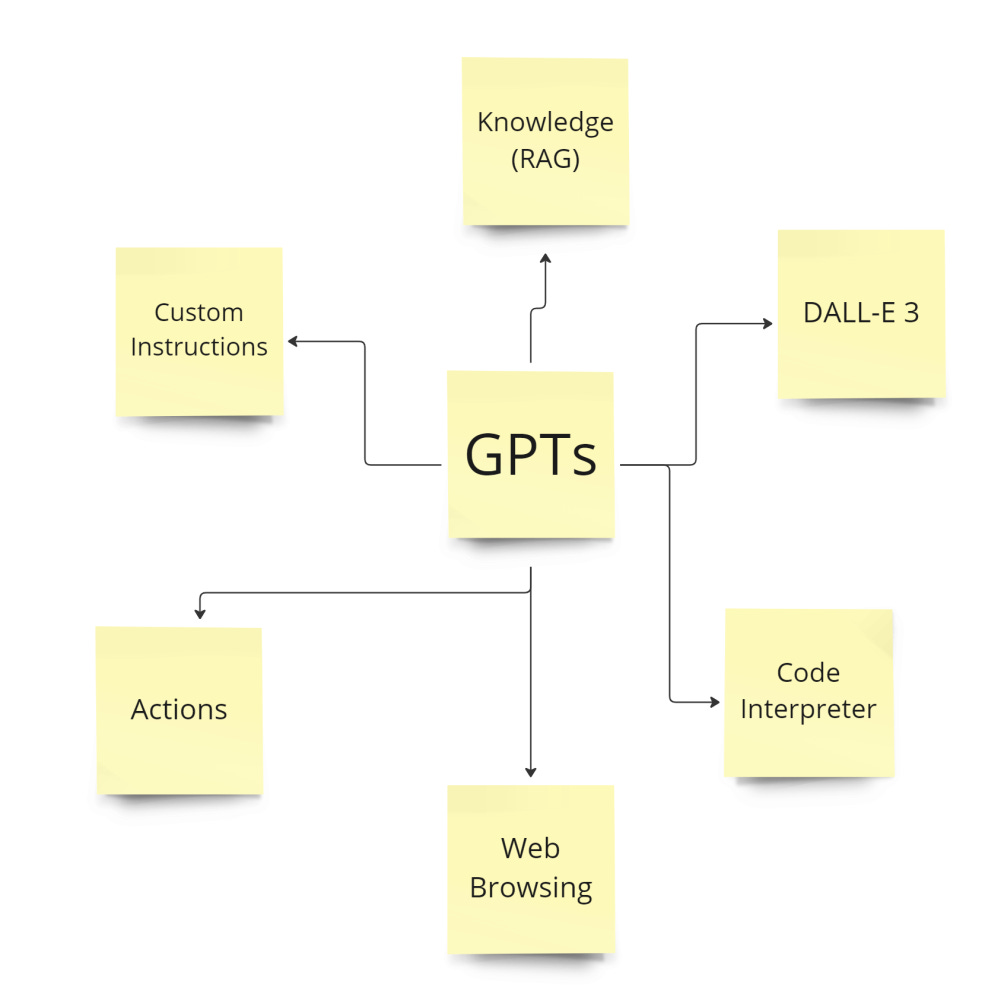

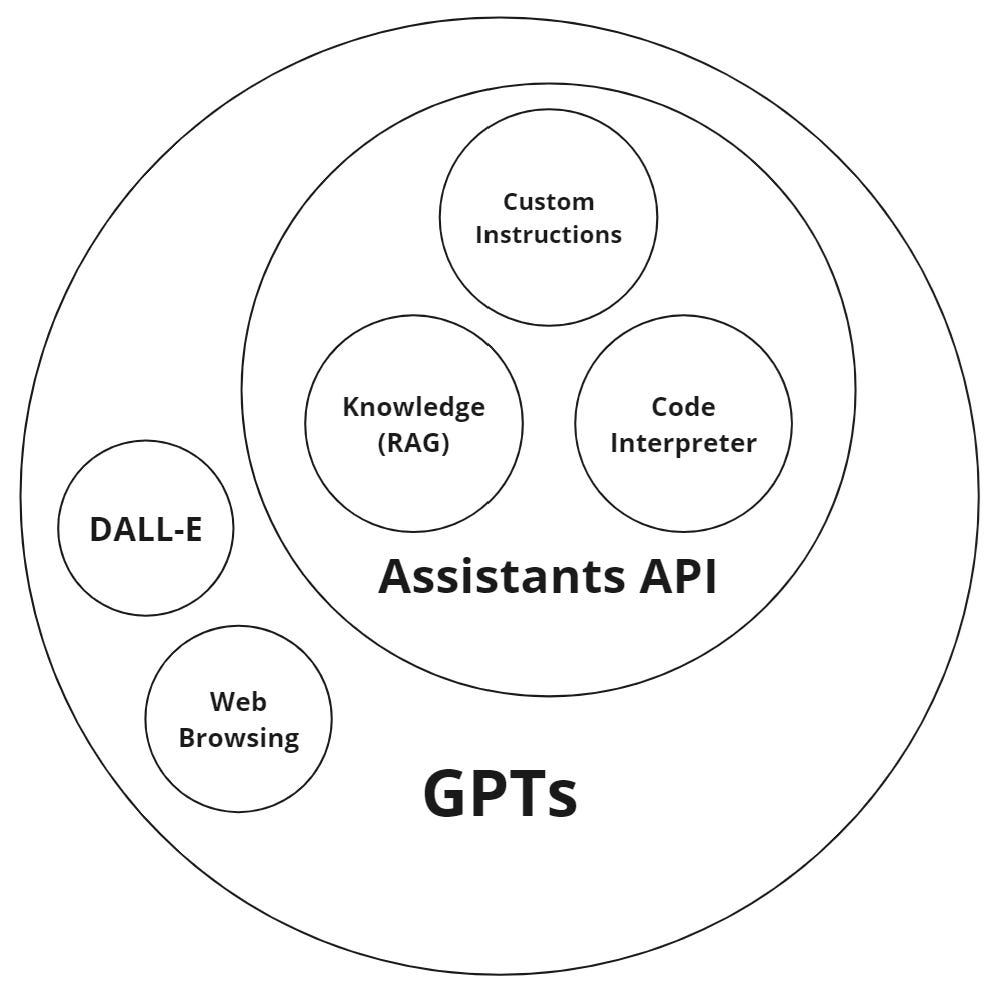

What are GPTs exactly?

GPTs are something like ‘chat templates’.

These chat templates have several configurations which are set at the time of creation. Here are the possible configurations:

1. Custom instructions – this is what makes GPTs so good. Normal chats in ChatGPT all have the same instruction ‘You are ChatGPT a model trained by OpenAI. Respond using markdown’, or something close to this. These instructions are sent with EVERY message (we call this ‘system prompt’). You could change this inside the ChatGPT app using the custom instructions feature (see image below). In fact, using the API you could use custom instructions since 2020. Problem? No one really cared to use custom instructions, although this is probably the most important feature of ChatGPT. Through the new feature: GPTs, custom instructions are embedded in the GPT itself. For ‘The Negotiator’ from above, the custom instruction is something like ‘Act like a professional negotiator. You’ve been trained for many years to negotiate any deal from the smallest business to Enterprise deals with the toughest negotiators in the world’ or something of the sort. Note that millions of different wordings would work ‘good enough’ but you get the gist of it.

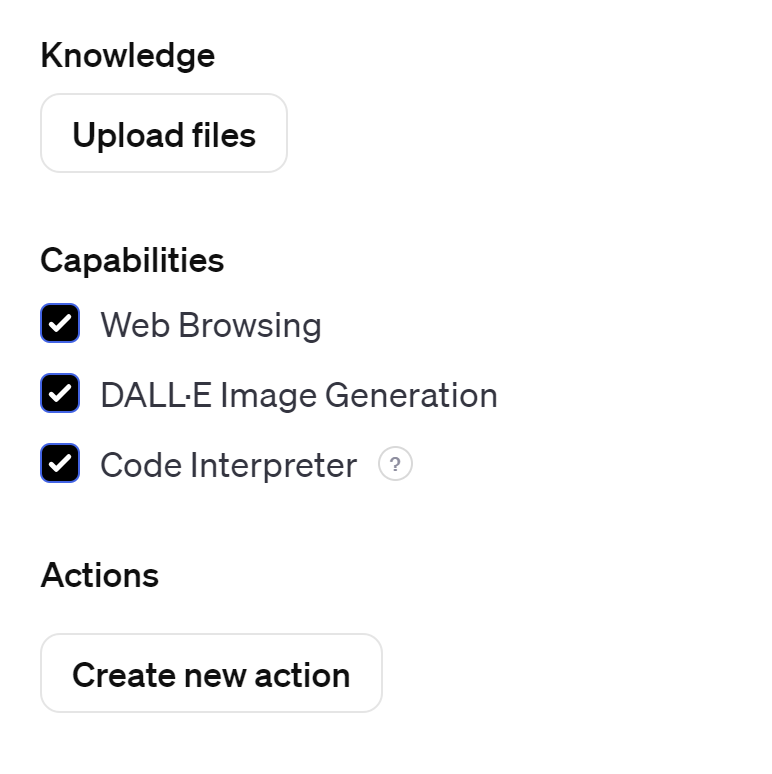

2. Knowledge (files, aka RAG) – Another big feature coming to GPTs. RAG (retrieval-augmented generation) allows you to add files (PDFs, spreadsheets, and other file types) and use the information from them during your chats. While this feature was available before, GPTs allow you to upload files once and then reuse them in many different chats (e.g. The Negotiator can be used as a chat starter for many chats). Using RAG is also one of the best ways to deal with hallucinations. Therefore, if you configure your GPT correctly you might get rid of many of the hallucination issues, too.

This ‘knowledge’ that we speak of, aka file upload, aka RAG is optional. You don’t have to use any files if you don’t want or need to.

Same applies for the ‘capabilities’: Web browsing, DALL-E Image Generation, and the Code Interpreter

3. Web browsing – We all know that ChatGPT is not great at web browsing (because it uses Bing underneath. Hint: Use Google Bard if you want to search the web). Do we always need it? Not really. ‘The Negotiator’ doesn’t really need to browse the web. Therefore, OpenAI allows us to simply disable the Web browsing for a particular GPT (chat template) and thus save time and resources (both for the user of the GPT and OpenAI itself).

4. DALL·E Image Generation - Your GPTs can also generate images… if you want them to! If not, simply uncheck the box of this capability. I never use it. Frankly, I still prefer Midjourney, but DALL-E 3 is getting there.

5. Code Interpreter – This is what they call ‘Advanced data analysis’ inside ChatGPT. In fact, the code interpreter works like this: you ask about a math problem, a data analysis problem or a coding issue – something logical, that is. LLMs suck at logic. But they can write good Python code, which when executed… can reach the desired answer. This is what the code interpreter does – allows you to do math, analyze data and more by going through a coding step. For a GPT like ‘The Negotiator’ this is obviously not needed but for a ‘Data scientist’ GPT you would definitely need it.

Based on this, we understand that the GPTs are nothing too special. They are an INTERFACE to simplify or complicate your ChatGPT with custom instructions and other capabilities. Finally, you can also add actions.

6. Actions – You can ask your GPT to perform actions OUTSIDE ChatGPT. For instance, it can ping your API or can send you a Slack message, things like that. This is very interesting in many ways. Its true power? Actions allow your GPT to act as a ‘plugin’. Thanks to the Actions, we now have an easy interface to create plugins or talk to our own apps.
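To make the ‘chat template’ idea concrete, here is a minimal sketch of what such a template could look like as a data structure. The class and field names are made up for illustration and this is not how OpenAI implements GPTs; only the role/content message shape follows the chat format used by OpenAI's API.

```python
from dataclasses import dataclass, field


@dataclass
class ChatTemplate:
    """Hypothetical stand-in for a GPT: settings fixed at creation time."""
    name: str
    instructions: str                      # the custom instructions
    knowledge_files: list[str] = field(default_factory=list)  # optional
    web_browsing: bool = False             # capabilities are opt-in
    image_generation: bool = False
    code_interpreter: bool = False

    def build_messages(self, history: list[dict], user_message: str) -> list[dict]:
        """Put the instructions first on every request, as the system prompt."""
        system = {"role": "system", "content": self.instructions}
        return [system, *history, {"role": "user", "content": user_message}]


negotiator = ChatTemplate(
    name="The Negotiator",
    instructions="Act like a professional negotiator.",
)
messages = negotiator.build_messages([], "Help me ask for a raise.")
```

Every new chat started from `negotiator` begins with the same system message, which is all a ‘smart prompt’ app really needs.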

An example

One of the best examples was provided at the end of the keynote by Romain Huet from the OpenAI team.

Watch his example (~5 mins long) from min 33 of the keynote.

Funny story about Romain – I met him at the end of the Dev Day. I didn’t recognize him and I asked: what did you talk about?

Romain: ‘I was a part of the keynote, right after Sam.’

Me: ‘Cool. What did you talk about?’

Romain: ‘It was a full example of how to use GPTs and Assistants API.’

Me: ‘Can you be more specific?’

Romain: ‘I gifted $500 to everyone in the room’ (and he actually built a program live in front of us to send $500 in OpenAI credit to everyone in the room – it was amazing – thanks!)

I said: ‘Ooooh… I remember’.

Truth is… I remembered the act of giving (for me: getting) $500. But my brain was total mush by then. It was 10PM and I had been giving my 120% all day.

On the spot, I couldn’t remember who Romain was or what he talked about.

In retrospect, Romain gave literally THE BEST 5 MINS of the whole conference – succinct, to the point, practical, and super exciting. Watch it.

Romain, if you are reading: sorry for the bad taste when we met. Maybe this bad experience will help you remember me!

We can talk about this more but let’s focus on what matters: are GPTs useful?

GPTs are actually genius but I don’t see them being that practical

I must say – I absolutely love the concept of GPTs.

I don’t like the feature because it is oversimplifying a complex prompting task and creating wrong expectations, especially because you cannot see the custom instructions and files provided.

But I love the concept.

Here are 6 reasons why GPTs are genius:

GPTs just sound cool. My own GPT, your GPT, my company’s GPT. Rad! I can guarantee you that this is how customers talk about these things. And now they love that they have ‘GPTs’, although they used to have them before, too!

Everyone is talking about GPTs. ChatGPT is no longer ‘ChatGPT’ alone. It is now eating the whole ‘GPT’ realm. CoachGPT? This is probably a GPT in ChatGPT, right? Or is it this website: https://www.coach-gpt.ai/. Not sure… Similarly, our company will probably experience some hit on the name (Team-GPT – is this a GPT where you can do team stuff… or what? No, no. Team-GPT is a different app where teams adopt GPT models - just check us out!!!). By adding this feature, ChatGPT no longer needs to protect the trademark GPT (which they failed to secure). They own the word GPT thanks to GPTs. Kudos!

GPTs make custom instructions cool. 90% of the point of GPTs is the popularization of custom instructions. Custom instructions are so important that it was 1 of the only 5 or so features ChatGPT ever shipped in its 1st year of operations. Yet, people didn’t really get it. Through GPTs, you don’t need to know how to use custom instructions. Simply use GPTs of other people who can.

GPTs = use cases. For many people, GPTs will be the way to find out about new and more use cases. OpenAI don’t have to educate you on use cases, if a GPT exists for your use case.

They are launching a marketplace for GPTs. Anyone in the world can create their own GPT and then share it with the community. This is the best way to popularize them. Give the power to the people and wait for the UGC (user generated content) to flood the market.

OpenAI mentioned they’ll be splitting the revenue with the best GPTs, thus disincentivizing people from taking their best GPTs outside of ChatGPT. Who wouldn’t like to split revenue with ChatGPT?

At the same time, here’s why I’m dubious about them.

One of the initial GPTs (featured on their page here) was:

Your tech advisor will help you with… your printer?

Apparently, GPTs will be created by humans. As long as humans don’t understand this feature well, it will suck. And this was created by the OpenAI team. Imagine the rest of the world…

I don’t think we are ready for the pace of AI development we are seeing right now. OpenAI are pushing 100 things out at the same time, and we still haven’t digested the main one (ChatGPT), which came out a year ago.

This is why I don’t believe in GPTs.

They are too genius for their own good!

Not to mention the fact that I’ll create my own ‘Tech Advisor’ and so will 1 million more people. How do you tell the good ones from the bad ones? With ChatGPT? This has to be thought through.

GPTs are brutal (bad) for the ecosystem

Having in mind that this was DevDay (a developer conference), the announcement of GPTs was rather brutal. It didn’t help developers in any way.

On the contrary… with one swift movement, OpenAI killed a million businesses!

Many of the apps that were built on top of ChatGPT, like ‘coach GPT’ or ‘negotiator GPT’ or ‘tech support GPT’ or whatever, were all… a ChatGPT wrapper + a smart prompt.

A prompt so smart… it deserves to be an app. (as we joked in our company)

GPTs are just this – your ability to build an ‘app’ inside the ChatGPT app, making ChatGPT an app of apps.

If your business was this fragile – it is already dead.

I’m not defending these businesses, I’m just stating the facts.

Assistants API

Since the target audience for this article is NOT technical people, I’ll go quickly through this one.

OpenAI also announced the Assistants API.

This is a new API which will allow developers to build technologies similar to the GPTs we described above. Or even more – agents, and other curious things.

Right now, through the Assistants API you can get the custom instructions, RAG, code interpreter. At DevDay I was told that the idea is to be able to replicate GPTs through the Assistants API 1:1 => the two should have the exact same capabilities.

However, for now this is the situation:

Is the API good? Don’t know yet but our team is very thrilled about the Assistants API. It will solve many, many problems for us.

First of all – Knowledge (RAG).

Knowledge (RAG, aka retrieval-augmented generation)

As mentioned above, RAG refers to retrieval-augmented generation.

It solves 2 problems:

You can add your own knowledge to the ‘chat’ (simplified), e.g. PDFs, txt files, spreadsheets

It is the best-known way to limit hallucinations, which is why so many people have raised money and are working on it
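For the non-RAG people, here is the idea in toy form: find the most relevant piece of your own text, then put it in the prompt next to the question. This is a minimal sketch that ranks by plain word overlap; real systems typically use embeddings and a vector search instead, and all names and sample texts below are made up.

```python
def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {word.strip(".,!?").lower() for word in text.split()}


def retrieve(question: str, chunks: list[str], top_k: int = 1) -> list[str]:
    """Rank chunks by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        chunks,
        key=lambda chunk: len(question_words & tokenize(chunk)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, chunks: list[str]) -> str:
    """Retrieval first, then generation: the model only sees the best chunk."""
    context = "\n".join(retrieve(question, chunks))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical knowledge base, e.g. paragraphs extracted from uploaded files.
chunks = [
    "Our refund policy allows returns within 30 days.",
    "Support answers emails on weekdays.",
]
prompt = build_prompt("What is the refund policy?", chunks)
print(prompt)
```

Because the model is told to answer from the retrieved text rather than from memory, it has less room to make things up, which is the hallucination benefit mentioned above.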

While I was in San Francisco, literally everyone was talking about RAG. Most of the conversations went like this:

Person A: RAG, RAG, RAG.

Person B: RAG, RAG?

Person A: RAG!!!

Person B: RAAAAAAAAAAAAG!

I wish I was joking. But since at Team-GPT our main focus is not RAG, we were looked down upon (a bit)… cause if you don’t do RAG, what are you even doing???

We have developed our own Team-GPT RAG. However, to have a good RAG, we anticipated we would have to buy, not build, so we haven’t invested too much. Thanks to OpenAI, we can now use their RAG, which was developed by the world’s leading AI engineers. These people get paid around $0.5M-$10M/year, so this saves us a lot of money.

Assistants API - Fatality

At the same time though, many startups were building RAG systems.

If you ask me – this was a very big blow for the whole startup ecosystem. Hundreds of millions of dollars invested in startups that are building different types of retrieval systems. They all got hit very hard by the OpenAI announcement. All these startups now must pivot, while the VCs have just lost a bunch of money.

Whoever was not killed by the GPTs announcement truly suffered from this one.

It was interesting how little excitement there was in the room about this announcement. No claps.

A bit later during the day I met John Allard, Engineering Lead, Fine-tuning Product Team at OpenAI. He held the talk Maximizing LLM Performance at OpenAI DevDay.

I asked him: ‘Good job on introducing the RAG! I love it but didn’t you slaughter the whole audience?’

John answered: ‘OpenAI’s RAG is quite simplistic. We are handling the general use cases but if you are looking to sell RAGs to Enterprise, you’d need way more than this. Therefore, folks that are working on RAGs should continue doing so… just don’t make them overly simplistic’.

And fair enough. If you are in the business of RAG, you should invest at least 6 months to 1 year in building it. If you built your RAG with LangChain in 1 day, you are not in the business of RAG.

But the bad taste was there. What if they double down on RAG? No one is safe.

Finally… is the API any good?

The Assistants API was free until yesterday, so we couldn’t benchmark the price (and that really matters when you are integrating a new API). The reviews are not thrilling, though…

Evening reception at the de Young Museum

At 4PM they kicked us out of the conference venue. There was an evening reception at the de Young Museum. I must say – it was really stylish!

10 points for OpenAI!

OpenAI also provided us with $100 in Uber credit, so we could move from one venue to the other. Together with the $500 in API credit, this amounted to $600 in gifts (the ticket to attend cost $450), so that was quite nice of them. Thanks!

I shared an Uber with an incredible Mexican lady (that I already mentioned above).

We shared the ride with another person, too. They were closely affiliated with OpenAI, so I don’t want to name them, but the second thing they said after their name was:

‘I’m here to witness the anthropological effect of OpenAI destroying the businesses of all the people in the room. I’m very interested to see how OpenAI handles it.’

Really? You came all this way for this? You didn’t have to make the effort, pal!

I studied in Western Europe + I sell products mainly in the US. But I’m just a guy from Eastern Europe. In our part of the world, when you smell bullshit, you call it out. Especially if it is an elitist type of douchebaggery – it really triggers us. This was the only fight I picked during OpenAI DevDay. Strategically, I shouldn’t have – because in business you never know. But morally – I had to see the anthropological effect of using my broken Bulgarian accent to shut the posh guy up with ‘smart words’. Rant over. Sorry – but this is an honest review!

If the person in question is reading – sorry dude but there’s a lesson you needed to learn, hope you did!

Either way – almost everyone else was really extraordinary. I’ll tell you about one other guy I met right after the ‘unpleasant debate’ I had.

I met a guy who is leading Product at FreeStyle Libre. This person has literally changed our lives at home.

My wife has diabetes, and our lives often revolve around regulating her blood sugar. In the last few years, there have been major advancements in the field with devices like FreeStyle Libre and Dexcom that help people with diabetes measure their glucose levels continuously and receive notifications (even on their phone) when those levels are low.

This is an extremely serious issue for millions of people worldwide.

And it was not only him.

Almost everyone at this conference was as impactful as this guy.

But the guy with the biggest impact was surely Sam Altman, the (former? future?) CEO of OpenAI.

It was possible to simply go and talk to him. And I did!

Thanks for the memory, Sam!

I’ll finish off with something about you.

After party with Grimes

The day was simply never ending.

At 10PM we moved away from the de Young Museum and into a club downtown.

Inside, I saw some weird signs.

A neural network, with a star, saying ‘COME AND TAKE IT’ was leading you to the dance floor. If that’s not a nerd magnet, I don’t know what is!

Looking around, I saw 99% guys. Literally there were no more than 10-15 girls in the club.

What could I expect from an after party in a developer-dominated field?

The club had a special guest: Grimes.

Oh, and she was good. She was very good!

Grimes is apparently a DJ, artist, musician and much more. They say she is very smart (I haven’t researched her a lot), and everyone was saying ‘Grimes is really into AI and is very smart’. Was she? Probably. But it really doesn’t matter for the work she was doing that night.

She was DJ-ing and it was magnetic.

I had NEVER seen a better fit for a live performer with their audience.

A hot girl, who is apparently smart and into AI, playing some good music in front of 99% guys.

Everyone had a good time. Software engineers dancing like crazy? That was new for me! Many attendees I talked to said this was ‘the best performance they’ve been to’.

Others who had seen Grimes before were thrilled to see her again.

Entranced AI engineers vibing with Grimes… I loved it!

And OpenAI paid for everything!

There was an open bar, there was Grimes and probably more. Interestingly, Grimes was also invited to OpenAI DevDay. There were some theories that this was to make Elon Musk angry but I don’t think anyone cared about him that day.

It was the day of OpenAI.

And to top this off – I learned something new.

Remember the ‘Come and take it’ sign?

There were more.

And more.

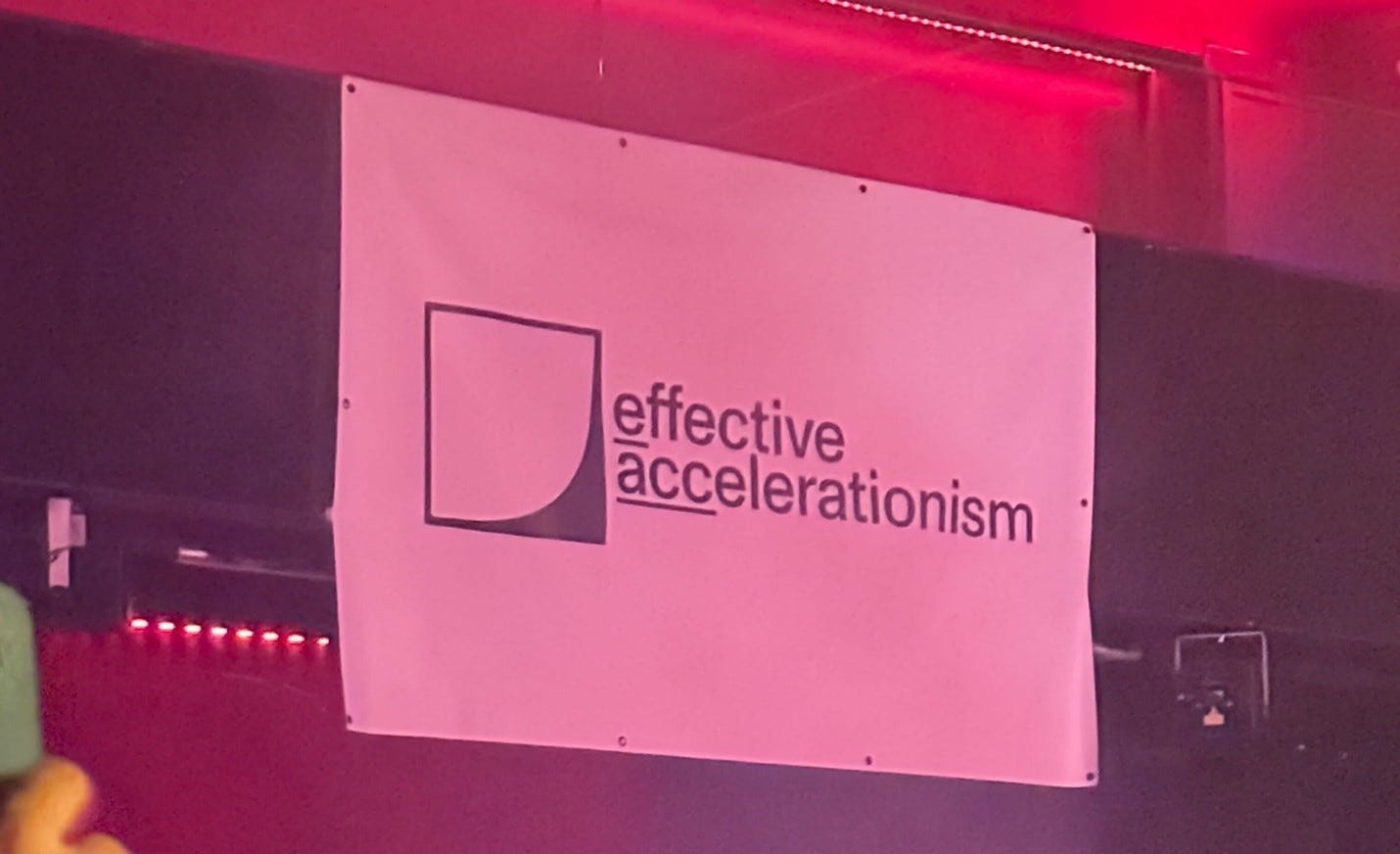

These were placed by a movement I didn’t know about before.

Effective accelerationism.

I asked around… but no one wanted to talk about it.

But everyone knew.

These are the things you can learn only when you go there.

Summary

OpenAI DevDay was far from the best conference I’ve been to, but the event was rather nice.

I’d give it 8/10.

Regarding OpenAI and the future of ChatGPT?

I was going to give it 10/10.

It was the most solid keynote announcement I have ever seen (after Steve Jobs announcing the iPhone).

And no wonder – Sam Altman and Greg Brockman started fundraising literally the same day. This was their plan all along. Greg met with Macron (President of France) and Sam met with important people, too.

But the board of directors didn’t like something… was it the keynote? Was it the fundraising? Was it the ‘acceleration’? So 11 days later, on Nov 17, they fired Sam Altman.

I was at DevDay. Talked to Sam, saw the keynote, understood the stuff he said. I’ve also been in the industry (both AI and startups) for 8 years and this move is inexplicable to me.

As I’m writing this, Sam Altman is no longer CEO, and Mira Murati (CTO) is now interim CEO.

It is hard to unpack this, and I don’t think this is the right place but I want to say this:

On 6 Nov 2023 (OpenAI DevDay), OpenAI seemed like the biggest company that will ever exist

On 17 Nov 2023 (after firing Sam Altman), OpenAI seemed like an inevitable crash and burn

The specifics are yet to be made available to outsiders (and insiders lol) but one thing is for sure – the next few days will determine the future of AI.

From the looks of it, Sam Altman will either:

Rejoin OpenAI as CEO, change the board of directors and become stronger than ever

Start a new venture (probably with Greg Brockman), raise a lot of money (investors love him), poach a lot of talent from OpenAI (employees love him), and outperform OpenAI

When I posted my photo with Sam, someone DMed me:

‘You are in the presence of royalty. Sam Altman is the new prince of tech’.

Sam Altman was dethroned as the prince of tech.

But he might return as the king.

🖖

P.S. The latest news is that Sam Altman and Greg Brockman are joining Microsoft in a ‘new advanced AI research team’. Read more here.

P.P.S. Sam Altman is back as CEO of OpenAI. Greg Brockman is also back. The board of directors of OpenAI has been changed, with the new one being more supportive of Sam Altman and his vision for OpenAI.